You might expect that the auditory system in the brain is critical for hearing and perceiving speech and that the motor system is critical for the production of speech. And this is the case. However, research in sensorimotor integration has shown that the reverse is also true. The auditory system is involved in speech production and the motor system can be involved in the perception of speech.

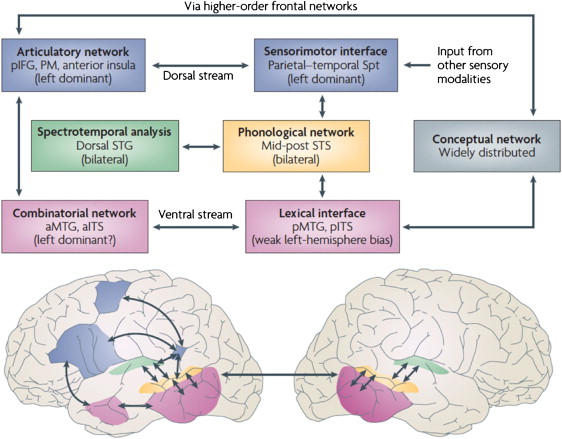

Figure 1. Illustration of the dual stream model of speech processing. In this model, early stages of speech processing occur bilaterally in auditory regions on the dorsal superior temporal gyrus (spectrotemporal analysis; green) and superior temporal sulcus (phonological access/representation; yellow). From there the signals diverge into two broad streams. The temporal lobe ventral stream supports speech comprehension (lexical access and combinatorial processes; pink) whereas a strongly left dominant dorsal stream supports sensory-motor integration and involves structures at the parietal-temporal junction (Sylvian parietal-temporal) and frontal lobe. Figure 3 from “Sensorimotor Integration in Speech Processing: Computational Basis and Neural Organization” by Gregory Hickok, John Houde and Feng Rong. Neuron, February 10, 2011.

Researchers studying speech production have shown that a goal of speech production is to generate a target sound. Researchers studying speech perception, on the other hand, have shown that a goal of speech perception is to recover the motor gesture that generated a perceptual speech event. Yet the authors of a new paper, “Sensorimotor Integration in Speech Processing: Computational Basis and Neural Organization” (published February 10, 2011 in Neuron), point out of these two lines of research that “there is virtually no theoretical interaction between them.” The paper attempts to bring findings from speech production and speech perception research together into a single framework that addresses sensorimotor interaction in speech.

In this paper, the authors review:

- Evidence for the role of the auditory system in speech production.

- Evidence for the role of the motor system in speech perception.

- Recent progress in mapping an auditory-motor integration circuit for speech and related functions (summarized in Figure 1 above).

Figure 2. An integrated state feedback control model of speech production. Articulatory control localized to primary motor cortex generates motor commands to the vocal tract and sends a corollary discharge to an internal model which makes forward predictions about both the dynamic state of the vocal tract and about the sensory consequences of those states. Deviations between predicted auditory states and the intended targets or actual sensory feedback generate error signals that are used to correct and update the internal model of the vocal tract. The motor phonological system embodies an internal model of the vocal tract and is localized to premotor cortex. The auditory phonological system encodes auditory targets and forward predictions of sensory consequences and is localized to the superior temporal gyrus and superior temporal sulcus. Motor and auditory phonological systems are linked via an auditory-motor translation system, localized to the Sylvian parietal-temporal area. Figure 4 from “Sensorimotor Integration in Speech Processing: Computational Basis and Neural Organization” by Gregory Hickok, John Houde and Feng Rong. Neuron, February 10, 2011.

They then go on to consider a unified framework based on a state feedback control architecture (summarized in Figure 2 above), in which sensorimotor integration functions primarily in support of speech production. These supporting functions include the capacity to learn how to articulate the sounds of one’s language, to keep motor control processes tuned, and to support online error detection and correction. The same system can also provide some top-down motor modulation of perceptual processes.
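
To make the idea of a state feedback control loop more concrete, here is a minimal toy sketch in Python. It is not the authors' model: the vocal tract is reduced to a single number, and the function name `simulate`, the two gain values, and the `feedback_shift` perturbation are all assumptions made for illustration. It shows only the general pattern described in Figure 2: a motor command is issued, an internal model predicts its sensory consequence, and the mismatch between predicted and actual auditory feedback is used to correct the internal estimate.

```python
def simulate(target, steps=50, control_gain=0.5,
             correction_gain=0.5, feedback_shift=0.0):
    """Toy scalar state feedback control loop (illustrative assumption only).

    target         -- the auditory target the speaker is aiming for
    feedback_shift -- hypothetical constant offset added to what is heard
    """
    state = 0.0      # actual (toy) vocal tract state
    estimate = 0.0   # internal model's estimate of the heard outcome
    heard = []

    for _ in range(steps):
        # Controller: motor command based on the internal estimate.
        command = control_gain * (target - estimate)

        # Forward prediction from a copy of the command (corollary discharge).
        predicted = estimate + command

        # The vocal tract executes the command.
        state = state + command

        # Actual auditory feedback, possibly perturbed.
        feedback = state + feedback_shift

        # Error signal: actual feedback versus forward prediction.
        error = feedback - predicted

        # Correct the internal estimate using the error signal.
        estimate = predicted + correction_gain * error

        heard.append(feedback)

    return state, estimate, heard


if __name__ == "__main__":
    # Unperturbed feedback: the produced state settles on the target.
    print(simulate(target=1.0)[0])

    # Shifted feedback: the produced state settles away from the target
    # so that what is heard matches the target.
    state, _, heard = simulate(target=1.0, feedback_shift=0.2)
    print(state, heard[-1])
```

In this sketch, when the heard feedback is shifted, the error signal gradually pulls the internal estimate toward what is actually heard, and the produced state drifts in the opposite direction of the shift until the heard output matches the target. That is one simple way to picture online error detection and correction, under the stated assumptions.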